What is Coinweb?

Coinweb - Blockchain tech's missing layer and the key to unblocking fundamental bottlenecks

What Coinweb is

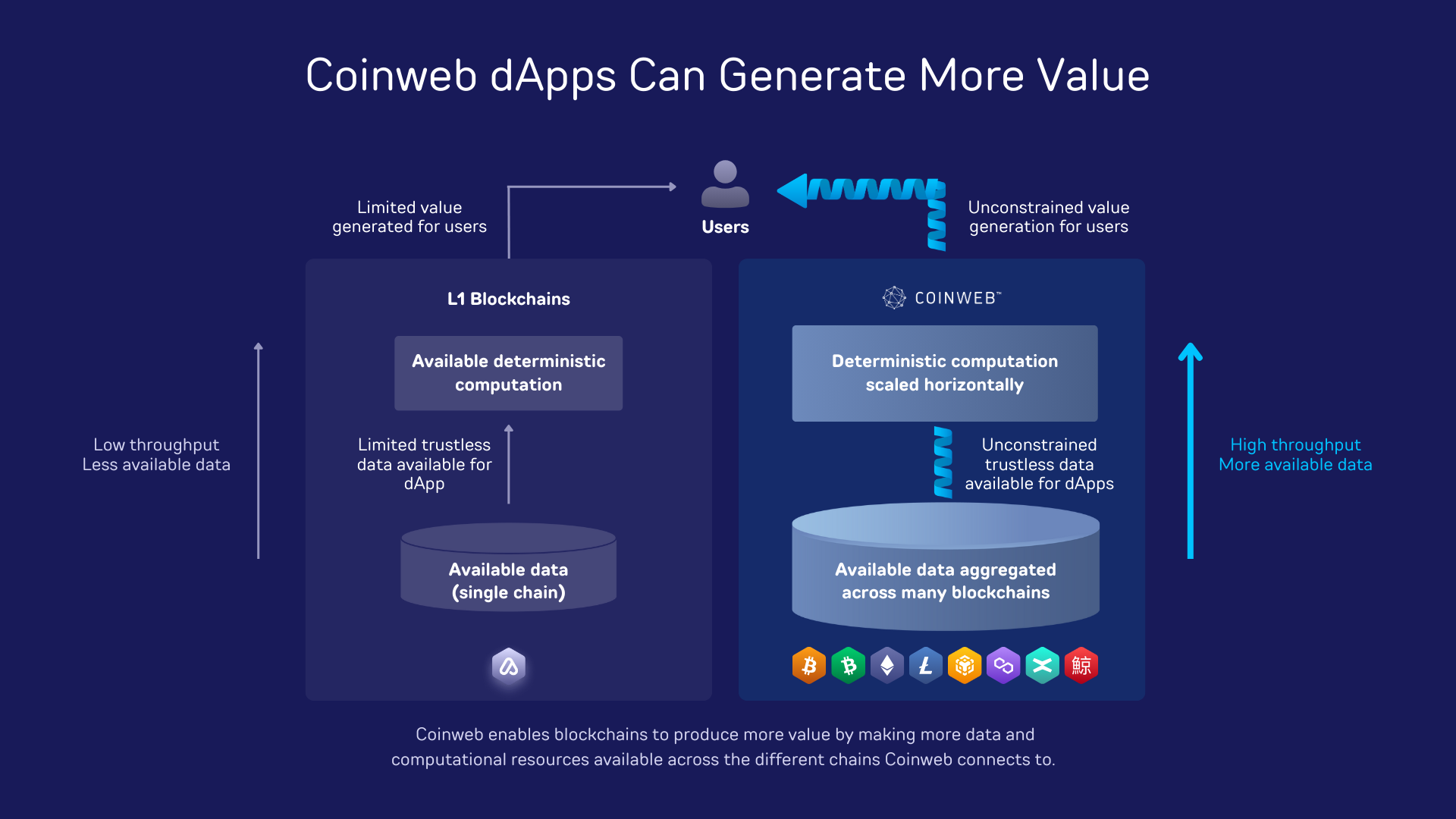

The Coinweb protocol is an entirely new approach to blockchain technology. Rather than building a new blockchain or extending an existing blockchain architecture, the Coinweb protocol allows existing and new blockchains to be used very differently from how they are used today. Coinweb combines different and incompatible blockchains, allowing dApps not only to combine the best properties of every blockchain but also to supercharge the connected blockchains, unlocking a range of new applications that would otherwise be impossible to implement.

Hard limits to growth

Network effects determine the value of a dApp. Satisfied users draw in new users, and with more users the dApp becomes even more useful, since the number of counterparties for interaction increases. As long as the dApp can serve new users' demands, the network effect will accumulate. However, unlike centralised applications, which can easily scale their infrastructure as their user base grows, dApps (decentralised applications) are bound by the capacity of the blockchain infrastructure they use. When dApps start to go viral (and they do!), growth is quickly stopped by the inability of the underlying blockchain to handle the growing traffic. Fees become unacceptably high and users are no longer satisfied with the dApp. More about network effects and generated value here

Current approaches

There have been many attempts to solve this problem. The first approach was to create new blockchains. This is not a bad approach, and it has resulted in many new and interesting blockchains, driving L1 technology forward. But it is not without issues. The first is that the large number of new blockchains makes each of them difficult to bootstrap. The second is that even with a significant capacity increase, they are still rigid and difficult to upgrade. They are also often specialized to solve one specific problem, for example transactions per second, while other bottlenecks such as compute constraints are overlooked. Other solutions specifically target compute constraints; rollups and various other L2 solutions are examples. What all these scaling solutions have in common is that they are rigid and have limited or no access to other blockchains' data, which makes bootstrapping harder.

Limited data availability

The bootstrapping issue mentioned above ties into the second bottleneck that keeps dApps from going viral: lack of data availability. Without access to other blockchains' data, there are few counterparties for users to interact with, and the threshold for a dApp to reach viral growth becomes higher. This is of course a well-known problem, and there are many bridges and interoperability projects that can move data between blockchains. However, the current solutions are detached from the security of the bridged blockchains, leading to increased risk and to limited composability and bandwidth.

What would be the ideal solution?

The ideal solution for a dApp would be to have access to all the data from many different blockchains, be able to combine the transactional capacity from many chains, and run complex smart contracts capable of processing all the valuable data and user transactions driving viral growth. Importantly, to underpin the network effects, the output of the dApp should maintain the security properties of the original L1 data.

Correct layering of building blocks

It turns out that this is indeed possible. The key to achieving this is to restructure flawed implementation patterns that cause unnecessary overhead and security issues. By introducing new primitives, it has been possible to create a new layering of blockchain infrastructure. This layering not only solves the immediate bottlenecks for dApp adoption but also provides a framework for future-proofing dApps, increasing their life cycle and growth potential.

New primitives

The Coinweb protocol both uses new primitives and makes them available for dApp development. The main additions are described below.

Proof and verification

For blockchains to work, there must be a way for participants in the network to verify and prove information using computer software. There are multiple mechanisms in use and they are all built using various cryptographic primitives to ensure provable validity.

A very commonly used mechanism in blockchain tech is distributed consensus. Distributed consensus is a form of voting mechanism that allows a network to agree on sets of data according to a predefined set of rules, where the validity of the outcome can be verified by any network participant using cryptographic functions.

Distributed consensus is a very powerful mechanism that can create verifiable outputs from randomly ordered, unpredictable inputs. It is a necessary component in all decentralised blockchain networks and has therefore, perhaps unsurprisingly, become almost the default mechanism for both proving and verifying blockchain data.

Since distributed consensus requires active participation from a large number of participants to be secure, it is an expensive and slow mechanism. Used indiscriminately, distributed consensus leads to fragile and inefficient blockchain infrastructure, incapable of meeting the scaling and security demands necessary for large-scale adoption of dApps.

RDoC (Refereed Delegation of Computation)

RDoC is a proof protocol with different characteristics from those of distributed consensus. While distributed consensus can be used on randomly ordered, unpredictable input data, RDoC can only be used to prove information derived deterministically from static, immutable data. That means that RDoC can only prove and verify computations that give the same result every time they are given the same input. However, while it cannot do everything that distributed consensus can do, what it can do, it does very well! And it turns out that in many cases RDoC does exactly what is needed, and much more efficiently than current setups. The most important properties of RDoC that allow the new layering of blockchain infrastructure are:

- RDoC moves verification out of the L1 blockchains. Unlike L1 verification, which is directly tied to consensus, or L2 verification for rollups, which happens in L1 smart contracts, RDoC verification happens at the edge, meaning that light clients such as wallets and dApp frontends can verify the validity of computations themselves. This is key for efficient decoupling of computation from the underlying consensus and data availability layers. Since verification is no longer tied to a specific consensus system, interoperability and blockchain-agnostic computation can now be implemented in L2, expanding data availability for dApps to include any combination of blockchains.

- RDoC is very efficient. RDoC clients need only one honest node in the network to be able to determine the correct result. Compared to PoW or PoS systems, which require a majority or super-majority of honest nodes, RDoC requires only a few nodes to achieve the same security as large PoW or PoS systems. This means that far fewer nodes have to replicate the computations, so smart contracts can be much more complex and run at much lower cost.

- RDoC is lightweight and flexible. Compared to Zero Knowledge-based systems where the computational overhead grows exponentially with the complexity of the underlying computations, RDoC can be adapted to include many different consensus systems with low (logarithmic) overhead.

You can find more info about RDoC here
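
The "one honest node" and "logarithmic overhead" properties can be illustrated with a generic refereed-delegation bisection game. This is a minimal sketch under stated assumptions, not Coinweb's implementation: `step`, `Provider`, `make_provider` and `referee` are hypothetical names, the computation is a toy deterministic function, and a real system would compare hash commitments to intermediate states rather than the raw states used here.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

MODULUS = 1_000_003


def step(state: int) -> int:
    """One deterministic step of a toy computation (illustrative only)."""
    return (state * 31 + 7) % MODULUS


@dataclass
class Provider:
    """A node that claims to have executed the whole computation."""
    name: str
    trace: List[int]  # claimed states s_0 .. s_n

    def state_at(self, i: int) -> int:
        return self.trace[i]


def make_provider(name: str, initial: int, n_steps: int,
                  cheat_at: Optional[int] = None) -> Provider:
    """Build a provider; if cheat_at is set, it reports a wrong state from that step on."""
    trace = [initial]
    for i in range(1, n_steps + 1):
        nxt = step(trace[-1])
        if i == cheat_at:
            nxt = (nxt + 1) % MODULUS  # inject an incorrect state
        trace.append(nxt)
    return Provider(name, trace)


def referee(initial: int, n_steps: int,
            a: Provider, b: Provider) -> Tuple[int, str, int]:
    """Light-client check, assuming at least one of a, b is honest.

    Returns (accepted result, name of the provider that was right, bisection rounds).
    """
    if a.state_at(n_steps) == b.state_at(n_steps):
        return a.state_at(n_steps), "both", 0

    # Invariant: the providers agree at index lo and disagree at index hi.
    lo, hi, rounds = 0, n_steps, 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        rounds += 1
        if a.state_at(mid) == b.state_at(mid):
            lo = mid
        else:
            hi = mid

    # Re-execute only the single disputed step locally.
    agreed = initial if lo == 0 else a.state_at(lo)
    expected = step(agreed)
    winner = a if a.state_at(hi) == expected else b
    return winner.state_at(n_steps), winner.name, rounds


honest = make_provider("honest", initial=5, n_steps=1024)
cheater = make_provider("cheater", initial=5, n_steps=1024, cheat_at=700)
result, trusted, rounds = referee(5, 1024, cheater, honest)
```

In this run the client never replays the 1,024-step computation. It needs 10 bisection rounds plus one locally re-executed step to identify the honest provider, which is where the logarithmic overhead comes from.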

Data availability

The main drawback of all current scaling solutions is that the increased scalability comes at the cost of sacrificing data availability from existing blockchain networks. This is true both for new L1 blockchains and for L2 systems such as rollups. While the increased scalability yields lower fees and more room to grow, the lack of access to accumulated secure blockchain data reduces the number of counterparties for users to interact with, and hence also the usefulness of dApps built on top of these systems. Currently, this problem is partially mitigated by blockchain bridges and other interoperability projects functioning as bridge hubs between different blockchains. Due to the large demand for interchain data availability, the current interoperability infrastructure has gained significant traction and is an important contribution to blockchain ecosystem growth. However, these solutions invariably introduce tradeoffs that slow down the growth of network effects.

- Commonly, they introduce additional consensus layers that weaken the security and value of the bridged data.

- Data are only indirectly accessible through user-initiated actions, or specific rigid interfaces with narrow scope.

- Incompatible solutions make composability low, limiting innovation and further expansion.

Inchain architecture and blockchain-agnostic platform token

The native coins of L1 blockchains serve as keys for combining and appending data in their secure data availability layer. To unify data availability across independent blockchains, a similar uniform key has to be available to access all the connected chains. The Coinweb protocol works by providing both a uniform format for embedding information inside different blockchains and a uniform execution environment on top of each connected blockchain. This is what constitutes the Coinweb protocol's blockchain-agnostic layer. Since Coinweb's platform token, CWEB, is blockchain-agnostic, it can be used to provide direct access to the data from all the connected chains and the ability to combine it, in much the same way as native coins do on single chains. Read more about the Inchain architecture here

Reactive smart contracts are data-driven, adding another dimension to data availability

Decoupled from L1 consensus and data availability layers, Coinweb smart contracts can self-activate and run indefinitely as long as they have a CWEB gas balance. These smart contracts can also monitor L1 and react to changes autonomously. Since Coinweb has removed the need for interchain consensus layers, all interchain operations are consistent. Combined with the reactive smart contracts' full access to L1 data, this becomes extremely powerful. Data-driven dApps can now be deployed on top of all connected blockchains, effectively unifying their data availability layers and unlocking their combined network effects.
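
The reactive pattern described above (a contract that keeps reacting to L1 changes for as long as it has gas) can be sketched as follows. This is an illustration only and does not use Coinweb's actual contract API: `L1Event`, `ReactiveContract`, `run`, the gas accounting and the chain names are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable, List


@dataclass
class L1Event:
    """A change observed on an underlying chain (hypothetical shape)."""
    chain: str
    kind: str
    payload: Dict[str, int]


@dataclass
class ReactiveContract:
    """A contract that reacts to L1 events while its gas balance lasts."""
    gas_balance: int          # hypothetical CWEB gas units
    gas_per_reaction: int = 1
    log: List[str] = field(default_factory=list)

    def on_event(self, event: L1Event) -> bool:
        """Handle one event; return False once gas is exhausted."""
        if self.gas_balance < self.gas_per_reaction:
            return False
        self.gas_balance -= self.gas_per_reaction
        # Example reaction: flag large transfers seen on any connected chain.
        if event.kind == "transfer" and event.payload.get("amount", 0) >= 100:
            self.log.append(f"large transfer on {event.chain}")
        return True


def run(contract: ReactiveContract, events: Iterable[L1Event]) -> int:
    """Feed events to the contract until it runs out of gas; return the count handled."""
    handled = 0
    for event in events:
        if not contract.on_event(event):
            break
        handled += 1
    return handled


events = [
    L1Event("bitcoin", "transfer", {"amount": 150}),
    L1Event("ethereum", "transfer", {"amount": 5}),
    L1Event("ethereum", "transfer", {"amount": 500}),
]
contract = ReactiveContract(gas_balance=2)
handled = run(contract, events)
```

With a gas balance of 2, the contract handles the first two events and then stops. Topping up the balance would let it continue with the next event.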

Read more about Coinweb's reactive smart contracts here

Consistency and liveness

The Coinweb protocol allows dApps to operate without strong coupling to any specific blockchain, which gives them several advantages compared to dApps that are hardcoded onto single blockchains. For this capability to be stable across any number of blockchains, cross-chain operations must be consistent. Such consistency is impossible to achieve if interchain consensus layers are used.

Cross-chain consistency

Consistent cross-chain operations are necessary for blockchain interoperability to truly scale. Without guaranteed cross-chain consistency, the system will become increasingly unstable as more blockchains are connected. Coinweb's protocol-level settlement layer uses a combination of a state propagation graph and L2 reorg capability to enable both consistent and stable interoperability.
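
One way to picture how a propagation graph and L2 reorgs could work together: if an L1 block is reorganised away, every L2 state that was derived from it, directly or through other L2 states, must be rolled back too. The sketch below shows only that general idea. The graph layout, the node names and `invalidated_by_reorg` are assumptions for illustration and are not taken from the Coinweb protocol specification.

```python
from collections import deque
from typing import Dict, List, Set


def invalidated_by_reorg(dependents: Dict[str, List[str]], reorged: str) -> Set[str]:
    """Return every state that transitively depends on the reorged L1 block."""
    seen: Set[str] = set()
    queue = deque([reorged])
    while queue:
        node = queue.popleft()
        for child in dependents.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


# Edges point from a source of data to the states derived from it (hypothetical data).
graph = {
    "chainA:block10": ["l2:state1"],
    "chainB:block7": ["l2:state2"],
    "l2:state1": ["l2:state3"],  # state3 combines data from both chains
    "l2:state2": ["l2:state3"],
}

to_roll_back = invalidated_by_reorg(graph, "chainA:block10")
```

Here a reorg of `chainA:block10` rolls back `l2:state1` and the cross-chain `l2:state3`, while `l2:state2`, which depends only on chain B, is left untouched.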

Aggregated liveness

The combination of reactive smart contracts and consistent cross-chain operations enables Coinweb dApps to obtain additional valuable properties. The Coinweb execution environment operates independently of state changes in the underlying blockchains. Coinweb dApps can use this property to monitor operational data from different blockchains and optimize blockchain utilisation accordingly. For example, if a chain gets saturated and gas prices spike, the dApp can direct transactions to a chain with free capacity and lower gas fees. This increases the practical liveness of the dApp. If a blockchain stops producing blocks or otherwise becomes inoperable, the dApp can use pre-configured fallbacks to seamlessly move operation to a functional blockchain.
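
The routing behaviour described above can be sketched as a small selection function. This is a hedged illustration, not a Coinweb API: `ChainStatus`, `pick_chain`, the status fields and the chain names are hypothetical, and a real dApp would obtain these metrics from the execution environment.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ChainStatus:
    """Operational data a dApp might monitor for one chain (hypothetical fields)."""
    name: str
    gas_price: float
    producing_blocks: bool
    saturated: bool = False


def pick_chain(statuses: List[ChainStatus], fallback_order: List[str]) -> Optional[str]:
    """Choose where to send the next transaction.

    Prefer the cheapest chain that is live and has free capacity. If every live
    chain is saturated, use the first live chain in the pre-configured fallback
    order. Return None if no chain is producing blocks.
    """
    live = [s for s in statuses if s.producing_blocks]
    with_capacity = [s for s in live if not s.saturated]
    if with_capacity:
        return min(with_capacity, key=lambda s: s.gas_price).name
    live_names = {s.name for s in live}
    for name in fallback_order:
        if name in live_names:
            return name
    return None


statuses = [
    ChainStatus("chain_a", gas_price=50.0, producing_blocks=True, saturated=True),
    ChainStatus("chain_b", gas_price=2.0, producing_blocks=True),
    ChainStatus("chain_c", gas_price=1.0, producing_blocks=False),  # halted
]
target = pick_chain(statuses, fallback_order=["chain_c", "chain_a", "chain_b"])
```

In this example the halted `chain_c` is skipped even though it is nominally cheapest, the saturated `chain_a` is avoided, and the transaction is routed to `chain_b`.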

More about how Coinweb unblocks your dApp's limitations for value generation can be found here. (Recommended read!)